Join us at I/ITSEC 2025 in Booth #1161 from December 1-4 at the Orange County Convention Center – South Concourse to see demonstrations of the projects below and more.

Be There Without Being There: Humanoid Robot Immersive Telepresence

This project unveils a breakthrough prototype that fuses real-time digital twin generation with immersive human telepresence through a humanoid robot. A human immersed in a virtual environment teleoperates a humanoid robot at a remote location as a true extension of their own body, regardless of how far away it is. Through advanced devices and sensors, the operator’s movements are mapped directly onto the robot, enabling fluid, natural, full-body remote interactions. The humanoid robot is equipped with real-time sensors that continuously capture and map its surroundings, constructing a digital twin of them as it moves and explores. This live reconstruction is displayed directly back into the immersive space, delivering deep situational awareness and empowering the human operator to see, sense, and interact with the remote world intuitively and confidently. By embodying the robot, users experience distant environments as though they were physically there. This is the next frontier of human telepresence.

Showcase Schedule: Monday 2-4, Tuesday 2-4, Wednesday 12:00-3:30, Thursday 11:30-1:30

Restoring and Augmenting Touch: Thermal Feedback for Human–Machine Interfaces

Receiving sensory feedback during interaction with both the physical and the virtual environment is essential for successful motor control. However, during XR interactions or for individuals with prosthetic hands, this sensory feedback is often absent. The lack of sensation can lead to reduced embodiment of the device (a prosthesis, for example), diminished fine-motor precision, and increased cognitive load. To address this, we are presenting a thermal glove system that delivers electrically generated signals to the skin, providing non-invasive sensory feedback. This system allows our research to explore both invasive and non-invasive neuromorphic encoding methods to restore or augment naturalistic sensation.

Showcase Schedule: Monday 4-6, Tuesday 12-1, Wednesday 11:30-1:30 & 3:30-6, Thursday 9:30-12:00

Performance Innovation in High-Risk Operations

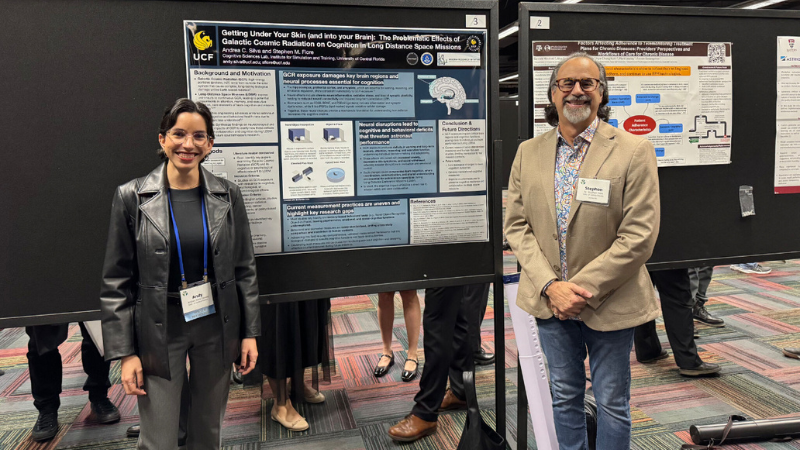

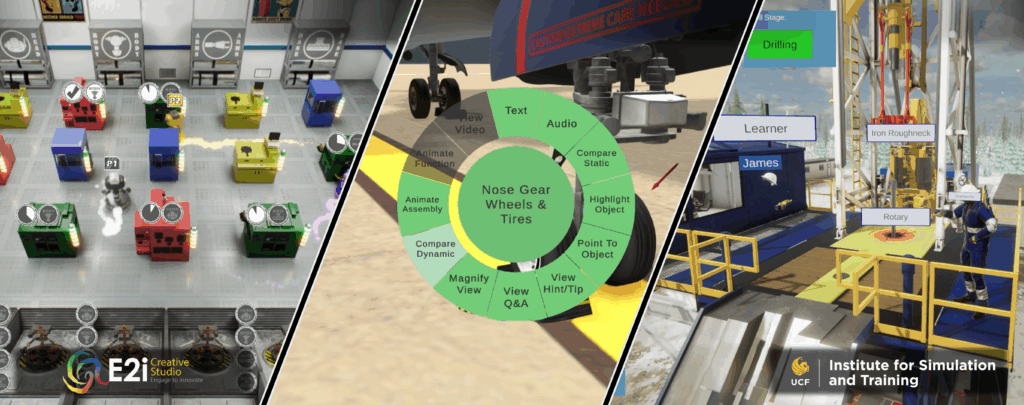

Innovation adoption is the thrust of E2i Creative Studio’s transformative research with partners. Purposeful design, iterative development, and contextual validation in real-world operating environments are our formula for delivering flexible, customizable learning ecosystems.

Showcase Schedule: Monday 3-6, Tuesday 12-3, Wednesday 3-6, Thursday 12:30-3:00

EarthXR: An Interoperable Digital Twin Platform from Surface to Space

EarthXR is a next-generation prototype of an immersive, seamless collaborative framework for a ground-to-Moon digital twin environment. It fuses real-time data from multimodal sensors with continuously updating real-time simulations to create a living, predictive, and adaptive digital twin of the Earth system, its orbital domain, and the Moon's surface. By integrating live sensor feeds, EarthXR lets users see and monitor the present, and it also enables deliberate, controlled departures from reality to test “what if” scenarios at mission-critical speeds. It also enables “rewinding” the system, that is, going back in time to reconstruct, analyze, and understand an event or situation and the pathways that led to the given outcome. This seamless integration of real data streams with high-fidelity simulations and predictions provides continuous insight. Users can monitor system behavior and maintain real-time situational awareness while anticipating impacts, testing strategies, planning missions, evaluating risks, and optimizing actions across terrestrial and orbital missions, all in a single integrated platform.

Showcase Schedule: Monday – Thursday, throughout the day

iHELP (Intelligent Holistic Emergency Logistics Platform): An Immersive Decision Support System for Disaster Response

iHELP (Intelligent Holistic Emergency Logistics Platform) is a prototype of a decision support system for humanitarian aid and disaster response. Traditional planning can’t keep up with the dynamic, time-sensitive nature of a crisis. iHELP’s optimization and simulation engine produces reliable, multi-modal transportation plans at interactive rates, enabling evaluation of multiple scenarios. Our demonstration highlights iHELP’s integration with UCF’s EarthXR immersive digital twin. With iHELP, instead of sorting through spreadsheets, planners can see the full operation unfold in real time, gaining greater insight into the situation and, as a result, a more informed and confident decision process for the plan. iHELP has been designed to address the planning challenges of military logistics teams, emergency management agencies, and international NGOs that need fast, informed decisions in complex environments.

Showcase Schedule: Monday – Thursday, throughout the day

Urban Digital Twins for Emergency Management

The project showcases how diverse data sources can be fused to assess risk in real time during a disaster. Open-source geospatial standards, including CityGML, 3D Tiles, GeoPose, and Building Information Model (BIM), are integrated with live urban traffic feeds, camera imagery, and recorded video. After image segmentation and risk analysis, a spatial clustering model maps and highlights varying risk levels across urban and regional areas. This integrated environment provides faster, clearer situational awareness to support better-informed and safer emergency decisions.

Showcase Schedule: Monday 2-4, Tuesday 12-2:30, Wednesday 3:30-6

PIXEL – An Industry Experimentation Lab for the Future

PIXEL (Performance Immersive Experience and Entertainment Lab) is your blank canvas: a dynamic, risk-free environment where you can test, pilot, and explore innovative solutions without having to invest in new facilities. Purpose-built for collaboration, the space blends the theatrical marvels of simulation with the artistic ingenuity of theatre in ways no other setting can. In this space, you define what’s possible!

Showcase Schedule: Tuesday 3-6:30, Wednesday 9:30-12, Thursday 12:30-3

DT4AI: LiDAR Perception using High Fidelity Digital Twins

This demonstration showcases a scalable methodology for training LiDAR-based perception models using high-fidelity digital twins (HiFi DTs). The goal is to bridge the simulation-to-reality (Sim2Real) gap by replicating real-world environments, including road geometry, traffic flow, and sensor specifications, within the CARLA simulator. Using only publicly available data (e.g., OpenStreetMap, satellite imagery), we generate synthetic point cloud datasets that match real-world distributions. These datasets have already been used to train 3D object detection models that outperform real-data-trained counterparts by over 4.8%. The technology is open-source and supports applications in intelligent transportation systems, disaster management, infrastructure monitoring, and defense. The target audience includes AI/ML researchers, simulation developers, military training and autonomy groups, and digital engineering teams interested in synthetic environments for perception model development and evaluation.

Showcase Schedule: Monday 4-6, Wednesday 12:30-3, Thursday 9:30-12

ABCs: AI, Blockchain, Cybersecurity for Advanced Visualization and Simulation

The Mixed Emerging Technology Integration Lab (METIL) will demonstrate our multimodal AI simulation work involving haptic gloves and advanced 3D displays for the Department of Energy (DoE) and other federal sponsors. METIL is using SenseGloves for training in virtual glove box scenarios and tabletop exercises. This haptic glove technology spans multiple XR platforms, including a holographic table. This research, sponsored by the DoE, is assessing the efficacy of training in virtual glove box environments compared with VR with controllers and with traditional analog methods. We will have a selection of our multiplatform training content running on the auto-stereoscopic Sony Spatial Reality Display. This is one of several 3D modalities we use for better decision making in complex situations. Additionally, we will showcase some of our Knights of the Round Tables™ AI Superteams research, blockchain applications, Quantum Cyber Awareness cards, and our current research across military, energy, space, and health.

Showcase Schedule: Monday 2-4, Tuesday 1-6:30, Wednesday 9:30-11:30 & 1:30-3:30, Thursday 12:00-3:00

Space Medicine: Training for Self-administered Imaging Tests

Extended Reality (XR) is emerging as a powerful tool for space medicine training, especially for teaching astronauts to perform self-administered tests in microgravity. Through immersive, interactive simulations, trainees can practice positioning equipment, capturing diagnostic images, and troubleshooting common issues without needing a medical expert to perform those actions. This project presents a hands-on virtual environment for this kind of training, helping astronauts build confidence and procedural accuracy, with the goal of ensuring that astronauts can reliably conduct ultrasound or other imaging tests during long-duration missions where timely medical assessment is critical.

Showcase Schedule: Monday 12-2, Tuesday 3-6:30, Wednesday 10:30-3, Thursday 9:30-12

Multimodal Embodied Human Digital Twin for Operational Readiness

This project introduces a prototype of a trusted companion digital team member (Human Digital Twin or HDT) that integrates multi-domain data sources, providing persistent, individualized support without replacing human judgment or command authority. It is able to “see”, so it can be aware of the emotional and cognitive state of the humans around it and understand the environment in which it needs to perform. It aligns human data (conversation, tone of voice, facial expressions, body language) with real-time scenario conditions (location, visible environment, input from sensors) to deliver context-aware insights to the humans through natural conversational interaction. As a trusted companion, the HDT provides situationally grounded feedback, serving as a supportive partner designed to reduce the cognitive load on its human teammates and enhance mission effectiveness. It functions as a human-centered system that accompanies the humans across the many situations and activities they face while training for, planning, and executing a mission. It is intended to be an interactive partner that enhances human capability while remaining grounded in human decision-making.

Showcase Schedule: Monday – Thursday, throughout the day

Digital Twin for Search & Rescue Drone Operations

Drones have recently been used for Search & Rescue by taking images and videos of affected areas or dropping off payloads for victims; however, to operate in real time, a drone pilot must plan the drone’s mission and continuously monitor it for new events. In this project, a digital twin for mission planning, execution, and monitoring is developed with bi-directional flow of data (disaster zones, travel routes, energy consumption) between the physical system (the drone) and the digital system (mission emulator and optimizer). The drone starts with predetermined disaster zones to visit, and if new zones are added midflight, it changes its route in real time. Battery levels are also continuously communicated to the digital twin to determine when the drone must return safely for recharging.
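The replanning loop described above can be illustrated with a minimal Python sketch. It is not the project's optimizer: the greedy nearest-neighbor ordering, the linear energy model, and every name (`plan_route`, `must_return`, `fly_mission`, `cost_per_unit`, `reserve`) are assumptions made for illustration only.

```python
import math

def distance(a, b):
    """Straight-line distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

def plan_route(position, zones):
    """Order zones greedily by nearest neighbor (a stand-in for a real optimizer)."""
    remaining = list(zones)
    route = []
    current = position
    while remaining:
        nxt = min(remaining, key=lambda z: distance(current, z))
        remaining.remove(nxt)
        route.append(nxt)
        current = nxt
    return route

def must_return(position, target, base, battery, cost_per_unit, reserve):
    """True if visiting target and then flying home would drop below the reserve."""
    needed = (distance(position, target) + distance(target, base)) * cost_per_unit
    return battery - needed < reserve

def fly_mission(base, zones, battery, new_zones_at=None, cost_per_unit=1.0, reserve=5.0):
    """Simulate a mission; new_zones_at maps 'visits completed' -> zones added midflight."""
    new_zones_at = new_zones_at or {}
    position = base
    pending = plan_route(position, zones)
    log = []
    visited = 0
    while pending:
        target = pending[0]
        if must_return(position, target, base, battery, cost_per_unit, reserve):
            break  # not enough energy to visit the next zone and get home safely
        battery -= distance(position, target) * cost_per_unit
        position = target
        pending.pop(0)
        visited += 1
        log.append(("visit", target))
        extra = new_zones_at.get(visited, [])
        if extra:
            # New zones reported midflight: replan from the current position.
            pending = plan_route(position, pending + extra)
    battery -= distance(position, base) * cost_per_unit
    log.append(("return", base))
    return log, battery, pending

if __name__ == "__main__":
    log, battery, unvisited = fly_mission(
        base=(0, 0), zones=[(3, 0), (6, 0)], battery=100.0,
        new_zones_at={1: [(4, 0)]},
    )
    for event in log:
        print(event)
    print("battery left:", battery, "unvisited:", unvisited)
```

In this toy model the drone inserts the newly reported zone into its route after the first visit, and it heads home early whenever the next leg plus the trip back would cut into the reserve. A real system would replace the greedy ordering and the distance-based energy estimate with the digital twin's own optimizer and measured consumption.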

Showcase Schedule: Monday 2-3, Wednesday 9:30-10:30
